Rush

Recommender systems have come a long way: initially conceived as a way to handle email information overload through collaborative filtering, they were soon adopted by online retailers (most prominently Amazon.com) to increase sales. Given this history, recommender systems continued to be used mainly in web interfaces and to reduce large data sets to well-chosen subsets, conserving bandwidth and preventing information overload.

Despite broadband internet connections and increased processing power in mobile devices, explicit research on user interfaces for mobile recommender systems is scarce: existing systems mostly rely on established desktop interaction metaphors (e.g., critique-based recommendation) and examine mobility issues such as loss of connection and decentralization. Peculiarities of mobile device interaction, such as occlusion problems, the influence of reduced screen space, and possibly abrupt endings (e.g., when the bus arrives at the station), have mostly been ignored.

Ward et al. presented Dasher, a visual text-entry tool based on language models, which has also been successfully ported to Pocket PCs. A continuous gesture selects letters to form words and sentences, and the underlying language model enlarges more probable items so that the correct one is easier to select. With up to 60 words per minute in its original version, it is an efficient way to enter text. Although Dasher has been used in a variety of other ways (e.g., with an eye tracker), its original task, text entry, has never changed.
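Dasher's central idea, giving more probable symbols more room, can be illustrated with a minimal layout sketch. It assumes a language model that returns next-letter probabilities (the values below are made up for illustration) and divides a vertical interval into boxes in a fixed, here alphabetical, order. The function name `allocate_boxes` is hypothetical, and Dasher's recursive zooming into the chosen box is not shown.

```python
from typing import Dict, List, Tuple


def allocate_boxes(
    probs: Dict[str, float], top: float = 0.0, bottom: float = 1.0
) -> List[Tuple[str, float, float]]:
    """Split [top, bottom) into consecutive boxes, one per symbol,
    with heights proportional to each symbol's probability."""
    total = sum(probs.values())
    if total <= 0:
        raise ValueError("probabilities must sum to a positive value")
    boxes: List[Tuple[str, float, float]] = []
    y = top
    # Fixed symbol order (alphabetical here), so letters stay where users expect them.
    for symbol in sorted(probs):
        height = (bottom - top) * probs[symbol] / total
        boxes.append((symbol, y, y + height))
        y += height
    return boxes


# Hypothetical next-letter probabilities after the prefix "th".
next_letter = {"e": 0.6, "a": 0.2, "i": 0.1, "o": 0.1}
for symbol, y0, y1 in allocate_boxes(next_letter):
    print(f"{symbol}: {y0:.2f}-{y1:.2f}")
# a: 0.00-0.20
# e: 0.20-0.80
# i: 0.80-0.90
# o: 0.90-1.00
```

The most probable letter, "e", receives the largest box and is therefore the easiest target to steer into.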

Rush: Repeated recommendations allow quickly creating personalized music playlists.

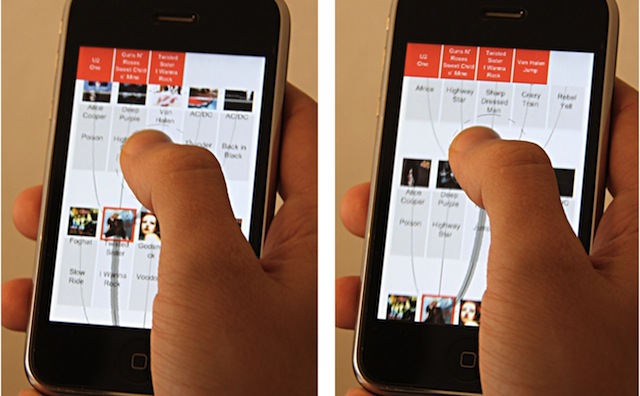

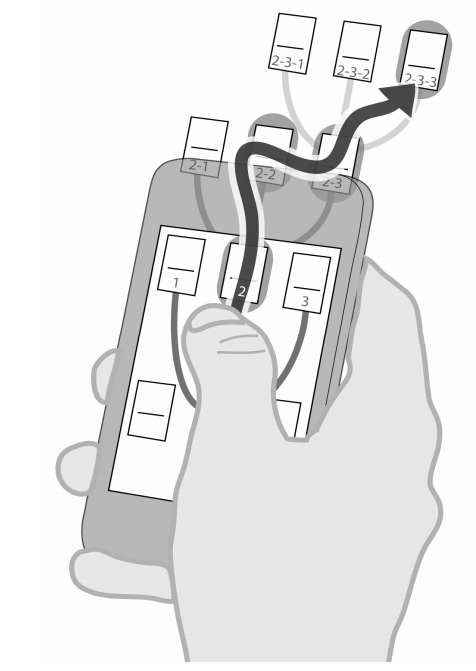

The rush project, a variation on Dasher, is an interaction technique for mobile touch-screen devices that lets users repeatedly select items from a set of recommendations. As in Dasher, interaction takes place on a virtual two-dimensional canvas. Starting from a seed item, the underlying recommender engine selects related items and displays them close to it. The user can then select one of these suggestions, which in turn generates recommendations related to that item. This iterative expansion of a recommendation tree continues until the user is satisfied with the set of selections.

Navigation and selection happen with a single-finger gesture: the canvas moves beneath the finger depending on the finger's distance and angle relative to the screen's center. For example, placing the finger in the upper right part of the screen causes the canvas to slide towards the lower left. To allow fluid gestures and avoid having to lift the finger, we used crossing gestures instead of pointing to select items: an item in rush is selected by drawing a line through it. In theory, the user's interaction is thus limited to moving the finger on the screen: putting the finger down starts the process, and lifting it again ends the collection.
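The three mechanisms described above (recommendation-tree expansion, canvas navigation, and crossing-based selection) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not rush's actual implementation: `canvas_velocity`, `stroke_crosses_item`, `PlaylistSession`, the linear speed gain, circular item shapes, and the toy recommender are all hypothetical. Because the canvas slides beneath the finger, the stroke that crosses an item is assumed to be tracked in canvas coordinates.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, float]


def canvas_velocity(finger: Point, center: Point, gain: float = 2.0) -> Point:
    """Canvas velocity for the current finger position (hypothetical helper).

    Screen coordinates with y growing downward. The canvas moves opposite
    to the finger's offset from the screen center; speed grows linearly
    with distance (the linear `gain` is an assumption).
    """
    dx = finger[0] - center[0]
    dy = finger[1] - center[1]
    return (-gain * dx, -gain * dy)


def stroke_crosses_item(p: Point, q: Point, item_center: Point, radius: float) -> bool:
    """True if the stroke segment p -> q touches or passes through a circular item.

    p and q are consecutive finger positions in canvas coordinates (assumption).
    """
    dx, dy = q[0] - p[0], q[1] - p[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.dist(p, item_center) <= radius
    # Closest point on the segment to the item's center (t clamped to [0, 1]).
    t = ((item_center[0] - p[0]) * dx + (item_center[1] - p[1]) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    closest = (p[0] + t * dx, p[1] + t * dy)
    return math.dist(closest, item_center) <= radius


@dataclass
class PlaylistSession:
    recommend: Callable[[str], List[str]]  # hypothetical recommender: item -> related items
    selected: List[str] = field(default_factory=list)
    suggestions: Dict[str, List[str]] = field(default_factory=dict)

    def select(self, item: str) -> None:
        """Add an item and expand the tree with its own recommendations."""
        if item in self.selected:
            return
        self.selected.append(item)
        # Offer only items that are not already part of the collection.
        self.suggestions[item] = [s for s in self.recommend(item) if s not in self.selected]


# Toy recommender over hypothetical track IDs.
RELATED = {"seed": ["t1", "t2"], "t1": ["seed", "t3"], "t2": ["t4"]}
session = PlaylistSession(recommend=lambda item: RELATED.get(item, []))
session.select("seed")  # finger down on the seed item

# Finger in the upper right of a screen centered at (200, 200): canvas slides to the lower left.
print(canvas_velocity(finger=(300, 100), center=(200, 200)))  # (-200.0, 200.0)

# The stroke over the canvas crosses suggestion "t1", placed at (50, 0) with radius 10.
if stroke_crosses_item((0, 0), (100, 0), (50, 0), 10):
    session.select("t1")
print(session.selected)  # ['seed', 't1']
print(session.suggestions["t1"])  # ['t3']
```

A linear mapping from offset to speed keeps the gesture predictable; a real implementation might add a dead zone around the center so that a resting finger does not move the canvas.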

Tech