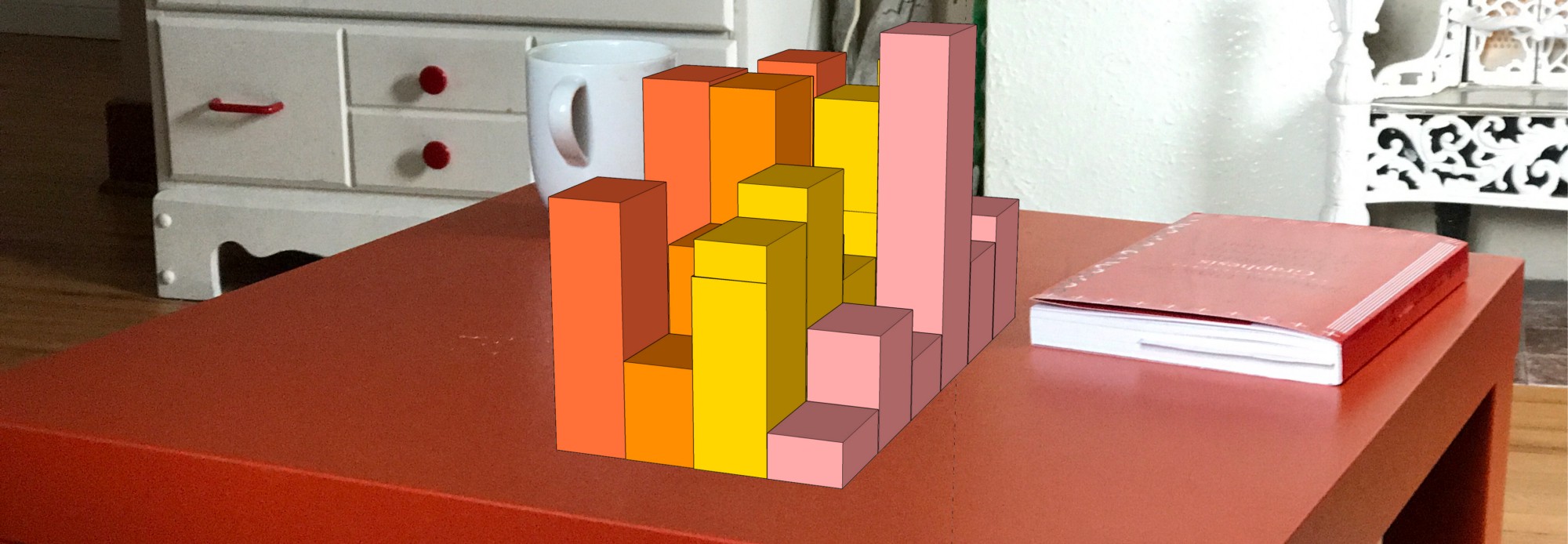

(Above: Photo by Osman Rana (Unsplash), augmented by the author)

Data visualization on mobile devices has seemed promising ever since the first iPhone: very capable portable computers! Innovative touch interaction! Highly localized content! Hundreds of visualizations for mobile devices exist, both as apps and as part of daily news content. But there’s one major problem that mobile visualizations haven’t been able to shake yet:

There’s just never enough space.

Mobile displays are necessarily small to be portable, and then there are also fingers in the way. With data visualization, more screen space usually means better analysis: data can be shown at a higher resolution, uncovering smaller relationships and parts of the data. It also becomes possible to show multiple charts side by side and have coordinated views, quickly flicking back and forth from one lens on the data to another.

It’s the difference between having your chart on a sticky note or across two side-by-side posters.

I think that Augmented Reality (AR) could solve this problem.

AR has mainly been a research direction for the last thirty-odd years, but is now slowly entering the tech mainstream. AR overlays reality with virtual information, which appears to be part of the environment (depending on your company affiliation, the principle could also be called Mixed Reality). Think: Snapchat Lenses with face tracking, Microsoft’s HoloLens letting you play Minecraft on your coffee table, or Apple’s recent ARKit.

When AR was initially proposed, headsets were usually bulky, with low resolutions and low refresh rates. Hiccups between head movement and on-screen content made it hard to keep up the illusion of actual virtual elements in your physical world, and could even lead to cyber sickness.

Smartphone production and improvements in technology have led to lower prices for better components, which has revolutionized both Virtual Reality (see Oculus) and Augmented Reality, since both build on similar technologies (small, high-resolution portable displays, head tracking, etc.).

So while our current AR is mostly based on the peephole metaphor (you’re looking through your phone to see face filters or Pokémon), future AR (the one we’re interested in for this article) should work with a headset only, ideally a pair of very unobtrusive glasses, leaving your hands free to interact with (augmented) reality.

So how could this future AR solve the lack of screen space for mobile visualizations?

By augmenting your reality, AR puts screens everywhere and nowhere at the same time. Screens become fully virtual — with all advantages. Lack of screen space no longer exists, since these virtual screens can potentially fill your whole field-of-view (and beyond).

Plus, just like other mobile devices, AR devices know where you are in the world (thanks to geolocation), but even more: where and what you are currently looking at! Combine that with automated object recognition and you have all kinds of fascinating applications for datavis opening up.

To be more specific, I can see three promising directions for these future Augmented Reality visualizations:

Situated personal visualizations

One of the promises of mobile visualizations has always been creating a personalized experience of data. Various apps make use of your location to e.g. center a map on your current position (Google Maps), show restaurants around you (Yelp) or keep track of your running routes (Runkeeper).

AR visualization has the potential to become even more personal: knowing about your preferences and goals, it can display the right data at the right time. But what’s even more interesting is that everything can be situated in the right place:

AR Visualizations become part of your environment, augmenting real-world objects (and people) with relevant information, placed at just the right positions. Visualizations are no longer images displayed in little glowing boxes, but augmented textures to the world.

Imagine trying to make your way across Boston in a snow storm. Your app knows exactly where you want to go and can draw on relevant information from the internet. It also knows that you’re looking at a bus right now and helps you make decisions: should I get on the bus or take an Uber? Do I have to hurry? Where do I have to transfer? How long will it take? And will this snow ever end?

All this information is right where you need it and completely private — no one else can see what you see. It’s the ultimate expression of personal visualization.

I like to see this development as a form of empowerment, if we get it right (if not: see the works of Keiichi Matsuda or various dystopian SciFi).

Similarly, AR vis can show data that’s relevant to you but maybe not to everybody.

You might be wandering along the aisles of your supermarket, being bombarded by messages of abundance. Package design in recent years hasn’t necessarily developed towards making nutritional information more easily accessible. I value the hours I spend staring at small-print labels, the results of months of heated discussions between industry and administration.

While there are databases for nutritional information freely available, typing product names into a search box, selecting the right one, checking the info and repeating it for every sparkly box on the shelf sounds … unappealing.

What if the machine could help you with that, take your own preferences when it comes to nutrition (and dietary restrictions to boot) and display a simple heat map, pointing you at just the right products? Once this filtering is done you can still go through the final candidates and make your own, much more informed, decision. And everyone gets their own custom heatmap, since there’s more than enough space for that in virtuality.
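To make the idea a little more concrete, here is a minimal sketch of how such a personal heat value could be computed per product. Everything in it is an assumption for illustration: the `Product` fields, the `heat` function, the thresholds and the simple averaging are hypothetical, and a real system would pull its numbers from a nutrition database and use a far more careful scoring model.

```python
from dataclasses import dataclass


@dataclass
class Product:
    """Hypothetical product record; values are per 100 g."""
    name: str
    sugar_g: float
    fibre_g: float
    allergens: frozenset = frozenset()


def heat(product, max_sugar_g, min_fibre_g, avoid):
    """Return a heat value between 0.0 (ignore) and 1.0 (great match).

    max_sugar_g, min_fibre_g and avoid stand in for one person's
    preferences and dietary restrictions (all illustrative).
    """
    # Dietary restrictions act as a hard filter.
    if product.allergens & avoid:
        return 0.0
    # Less sugar is better; anything at or above the limit scores 0.
    sugar_score = max(0.0, 1.0 - product.sugar_g / max_sugar_g)
    # More fibre is better, capped once the target is reached.
    fibre_score = min(1.0, product.fibre_g / min_fibre_g)
    return (sugar_score + fibre_score) / 2


shelf = [
    Product("Muesli", sugar_g=5, fibre_g=8),
    Product("Nut bar", sugar_g=10, fibre_g=6, allergens=frozenset({"nuts"})),
    Product("Candy", sugar_g=60, fibre_g=0),
]

# One person's preferences; someone else would get a different heat map.
heatmap = {
    p.name: heat(p, max_sugar_g=20, min_fibre_g=6, avoid=frozenset({"nuts"}))
    for p in shelf
}
```

The AR layer would then only have to map each heat value to a color and draw it over the recognized package on the shelf.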

What all these projects have in common is that they’re mainly interfaces for data access. The info is usually somewhere out there on the internet but only accessible via text search or obscure API calls. The beauty of AR with its camera-based object recognition is that the whole world becomes the interface to this data: just look at something to receive more information.

Arguably, that’s how we’ve accessed information since the dawn of time.

3D visualizations

You heard me right, I’m also crossing that line here: I think that 3D visualizations could potentially be much more useful and even become mainstream with Augmented Reality.

3D visualizations are shunned in the datavis community, a reaction fueled by scores of bad 3D Excel charts and blatant marketing deception. But if you look at the perceptual science behind them, they might actually not be that terrible (see Robert Kosara’s great discussion on his blog). Sure, they suffer from occlusion and perspective distortions, but the additional spatial dimension might make up for that. Especially when we combine them with the most important feature of AR: situatedness in the real world.

Imagine having your awful, awful 3D bar chart situated on your coffee table, right in front of you. There’s occlusion (front bars occlude back bars) and distortion (back bars look relatively smaller).

A 3D visualization of me dragging boxes in SketchUp

But in AR, the main problem of 3D vis - the virtuality of it all - is less pronounced. The abstract bars become parts of your environment — it’s clear that the bars in the back look smaller than they actually are (compare them to the physical book next to them). Similarly, occlusion is easily solved by moving around the table, just as you would in the real world. Additional stereo cues (hard to show in a 2D photo) make the virtual bars seem more real than they are.

This follows the ideas of Embodied Cognition, a theory that postulates that our cognition is much more closely coupled to our bodily existence than might be apparent. With AR, you still have your own body available to you to explore data as part of your environment. This is in contrast to Virtual Reality, where you’re completely isolated from both your environment and your body — which can be highly disorienting.

Sporadic research here and there points at AR 3D vis being a promising direction (Ware and Mitchell created a highly efficient 3D node chart in 2005, and there’s a workshop series on Immersive Analytics), but I figure that the proliferation of AR toolkits will lead to a lot more results in the near future.

And yes, there will be the trend of cramming the first AR apps to the brim with flashy 3D stuff. Just like the first new 3D movies insisted on always throwing virtual objects at the audience. But that will subside eventually and make 3D a viable approach in the data visualizer’s toolkit.

Floating screens everywhere

Finally, AR solves the Mobile Vis dilemma of never enough space by simply letting you create as many arbitrarily-sized and -shaped displays as you need. Free-floating around you, still 2D, but as big as you need them.

Since full AR devices have their physical screens right on your nose, they can create an infinite number of arbitrarily-sized virtual screens, situated in your environment. Head tracking lets you switch between them just as you would with physical screens: simply by turning your head.

These virtual screens are interesting when it comes to resolution: since they’re just simulations, their resolution can be as fine-grained as you need it to be. The physical resolution of the AR headset always stays the same, but when only part of a virtual screen is visible (because you’re standing close to it), the full physical resolution is mapped to that part of the virtual screen. This works well, since our eyes can’t see everything in sharp detail at once anyway. We usually move around our environment, coming closer for in-depth inspection. The same goes for virtual screens: while they might be relatively low-res from afar, you can move as close as you like to see infinitely fine details.

There are some ideas in this direction: Isenberg et al. describe “Hybrid-Image Visualizations” that show different types of visualizations at different viewing distances. Similarly, the static Fat Fonts encode multiple layers of values through symbols and brightness, showing different aspects for different viewing distances. And, of course, highly detailed paper-based visualizations inherently allow accessing the data at overview or detail levels.
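The resolution argument above can be turned into a small back-of-the-envelope calculation. This is only a sketch under simplifying assumptions: the function name, the 40 degree field of view and the 1280 horizontal pixels are made-up example values, the screen is viewed head-on, and the headset’s pixels are treated as evenly spread across its field of view.

```python
import math


def headset_pixels_per_metre(distance_m, screen_width_m,
                             fov_deg=40.0, headset_px=1280):
    """Headset pixels available per metre of virtual screen.

    fov_deg and headset_px describe a hypothetical headset
    (horizontal field of view and horizontal pixel count).
    """
    fov = math.radians(fov_deg)
    # Angle the virtual screen spans when viewed head-on from distance_m.
    angular_width = 2 * math.atan(screen_width_m / (2 * distance_m))
    if angular_width <= fov:
        # Whole screen is visible: it only gets a share of the pixels.
        pixels_on_screen = headset_px * angular_width / fov
        visible_width_m = screen_width_m
    else:
        # Only part of the screen fits into the field of view:
        # all physical pixels are mapped onto that visible part.
        pixels_on_screen = headset_px
        visible_width_m = 2 * distance_m * math.tan(fov / 2)
    return pixels_on_screen / visible_width_m


# A 2 m wide virtual screen seen from far away, close up, and closer still.
far = headset_pixels_per_metre(4.0, 2.0)
near = headset_pixels_per_metre(0.5, 2.0)
nearer = headset_pixels_per_metre(0.25, 2.0)
```

Under these assumptions, once the screen fills the field of view, halving the viewing distance doubles the number of physical pixels per metre of virtual screen, which is the "step closer to see more detail" effect described above.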

These virtual 2D screens are also a great transitional technology, until all legacy applications have been mapped to an AR-context (if that ever happens). You can think of them as virtual monitors, same as your regular physical ones, just instantaneous, free and 100% eco-friendly.

And another advantage, especially when it comes to visualizing sensitive data — be it personal or business — in a public setting: they’re completely invisible to everyone else. The problem of “shoulder surfing” does not exist in AR, so no one can see what’s on your virtual screen, even though it might fill your whole field-of-view¹.

Shut up and take my money

So, how much longer until we can get to work on AR visualizations?

The release of tools like ARKit will make powerful AR apps much more common in the coming years. Another nice side effect for Apple: once display technology becomes mature (and especially thin) enough for them to release their own set of AR glasses (which their patents point at), they will already have an App Store full of AR-compatible apps.

Microsoft’s HoloLens is already available, not very portable and dorky, but almost magical when you put it on. Google has Daydream and Tango, which might make a powerful combination for Augmented Reality. We’ll see which of the tech giants will be the first to bring AR to the mainstream.

But in any case: smartphones are a transitional technology — we’re actually working towards a world without screens, where everything is a screen. And in this world, all the screen space you could ever want for your data visualizations is instantly available.

(This is part 1. Read part 2 on creating silent augmented reality here).

Notes:

¹ Admittedly, shoulder surfing might be possible for someone standing so awkwardly behind you that they can read what’s on the inside of your high-resolution glasses. All the spoils would be deserved.