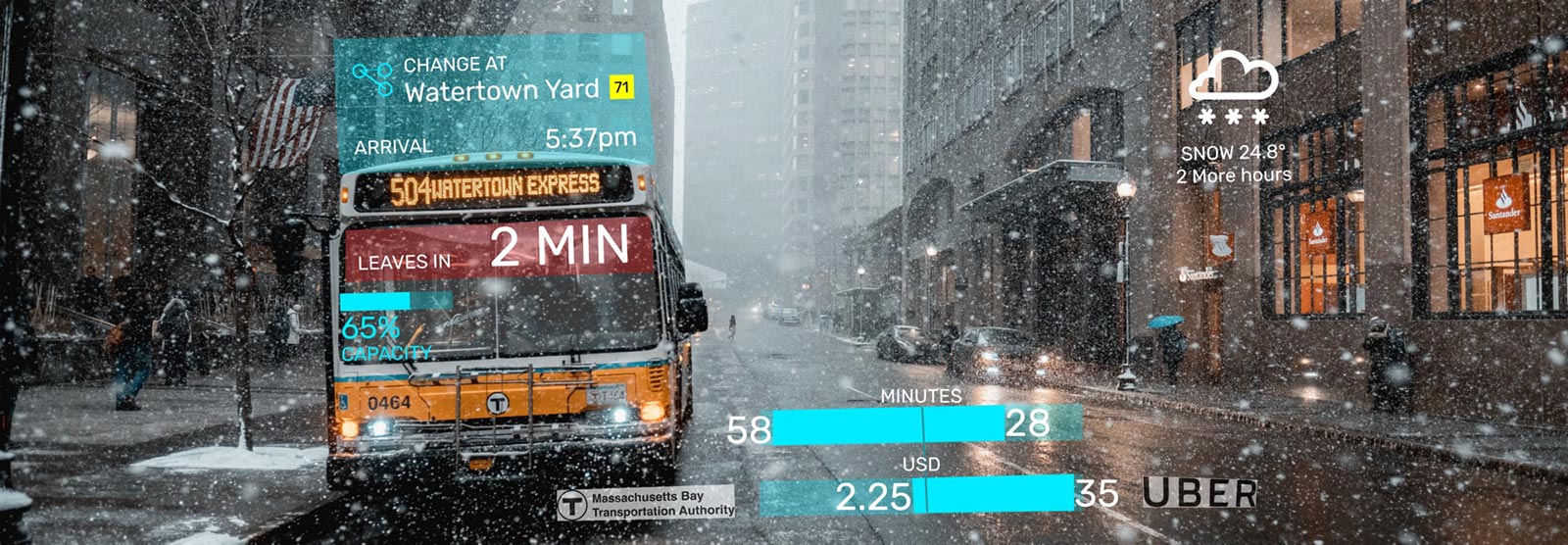

(Image above: still from Keiichi Matsuda’s HYPER-REALITY)

(This is part 2. Read part 1 on augmented reality visualizations here)

Whenever I read an article on Augmented Reality or see it pop up in a movie or TV show, it looks like the picture above.

Nothing screams future like neon colors and flashy animations. And I don’t exempt myself from that: even in my last article on how to bring data visualization to AR, my examples were high in the neon department to make it obvious at first glance that This Is The Future™:

It reminds me somewhat of ’90s web design, back when blinking text and construction GIFs were all the rage.

Now, while this type of augmentation is clearly visible and extremely flexible (pack all your data into whatever representation you want), it is also highly distracting:

▶ Primary colors and animations are designed to catch our attention. Actually focusing on the task at hand might become hard in such an environment (imagine reading a scientific paper in a casino).

▶ Virtual objects overlap physical objects. This is clearly a problem when the hidden physical object is a car speeding towards you, but it can also be annoying in more benign situations, like searching for your car keys hidden behind a virtual bar chart.

So what to do about these issues?

The whole premise of this article and the one before it is that near-future AR setups couple see-through glasses with automatic object recognition. This also means that these systems can shape your reality in any way they want.

They don’t have to display neon-blue blinking squares but can create whatever they want and overlay it on your reality.

How about using these powers of augmentation to create silent augmented reality? Augmentation that helps you but is (mostly) seamless, blended into reality. No more constant barrage of animations and garish colors, just additional, useful, non-distracting information.

Realistic virtual objects

While it might seem required for Cyberpunk cred, Augmented Reality doesn’t have to display anything neon.

Computer graphics have come far enough in the last decades to generate seemingly realistic objects and use them to represent data.

While we could, of course, just throw a neon-blue bar chart into the reader’s face, we could also use their current surroundings for inspiration to show something like this:

The world's most serene bar chart

Imagine those rocks being created by the AR engine and only visible through glasses, which means they’re just as unreal as any of the neon AR we’ve seen before. Still, they fit much better with their environment and create none of the jarring breaks in reality. And they’re just as good at their job of displaying data.

If the AR system is aware of a person’s surroundings, it can react with suitable virtual objects. There are massive libraries on the web filled with realistic 3D models of rocks, seashells, books, small animals, etc., all suitable for use in visualization. Combine those with some realistic-looking shadows et voilà. And yes, there are even 3D models of pies to be used for you-know-which chart.

To uphold the illusion of reality, these virtual objects should appear real at first glance, which makes shadows, occlusion, and perspective important. But other aspects of their behavior also have to be tightly controlled: your beach-rock bar chart can’t just appear out of thin air. The virtual rocks might either drop from the sky or, perhaps less irritatingly, slowly grow out of the ground. Physics engines for video games seem like a great fit for these problems.
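To make “slowly grow out of the ground” a bit more concrete, here is a minimal sketch of an eased growth animation. It assumes the AR engine lets us set a virtual rock’s height every frame; the function names (`ease_out_cubic`, `rock_height_at`) and the 1.5-second duration are hypothetical choices for illustration, not part of any particular engine.

```python
def ease_out_cubic(t: float) -> float:
    """Ease-out curve: fast start, gentle settle. t is clamped to [0, 1]."""
    t = max(0.0, min(1.0, t))
    return 1.0 - (1.0 - t) ** 3


def rock_height_at(elapsed_s: float, target_height: float, duration_s: float = 1.5) -> float:
    """Height of a virtual rock 'growing' out of the ground.

    elapsed_s: seconds since the chart started appearing (hypothetical timer).
    target_height: final height, i.e. the height that encodes the data value.
    """
    return target_height * ease_out_cubic(elapsed_s / duration_s)


if __name__ == "__main__":
    # Sample a few frames for a rock whose final height is 0.3 m.
    for t in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(f"t={t:.1f}s height={rock_height_at(t, 0.3):.3f} m")
```

An ease-out curve is just one plausible choice: the rock rises quickly at first and then settles gently, which tends to read as less abrupt than a linear pop-up. A physics engine could instead simulate the rocks dropping under gravity.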

If we loosen our rules a bit and allow displays that consist of seemingly real objects but are definitely nothing that you would see when you take your glasses off, other interesting things become possible.

Virtual planes show how busy an airport is over the course of a day, just as virtual birds (alongside the real birds they are derived from) show their flight paths.

While these examples were constructed through photo manipulation (either by directly stitching together photos of planes or by combining video frames with a filter), one could imagine them happening in real time in augmented reality.
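The exact filter behind the bird image isn’t specified. One common technique for this kind of flight-path picture, assumed here, is a per-pixel minimum (“darken”) blend across frames: dark birds against a bright sky survive from every frame, so their positions accumulate into a trail. The sketch below uses tiny grayscale frames as plain nested lists; the function name is hypothetical.

```python
from typing import List

Frame = List[List[int]]  # grayscale pixels, 0 = black, 255 = white


def darken_composite(frames: List[Frame]) -> Frame:
    """Per-pixel minimum across all frames ("darken" blend).

    Dark objects (birds, planes) against a bright sky are kept from every
    frame, so their successive positions add up to a visible trail.
    """
    if not frames:
        raise ValueError("need at least one frame")
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [min(frame[y][x] for frame in frames) for x in range(width)]
        for y in range(height)
    ]


if __name__ == "__main__":
    sky = 230
    # Three 1x4 frames: a dark "bird" (value 20) moves one pixel right per frame.
    frames = [
        [[20, sky, sky, sky]],
        [[sky, 20, sky, sky]],
        [[sky, sky, 20, sky]],
    ]
    print(darken_composite(frames))  # [[20, 20, 20, 230]]
```

For bright planes against a night sky, the analogous choice would be a per-pixel maximum (“lighten”) blend. In a live AR version, the system would more likely render the trail as an overlay than composite camera frames.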

But we don’t have to stop at adding realistic virtual objects. AR can just as well be used to distort and manipulate the real world itself.

Reality distortion

Given that our Augmented Reality system can recognize real-world objects and create arbitrary graphics to overlay on top of them, something even wilder becomes possible:

Augmented reality is not restricted to creating virtual objects situated in the real world. It can also manipulate real-world objects to make them (seemingly) shrink, grow or even disappear.

Maybe you have an ugly dent in your car: since your AR glasses track where you’re looking, they could display a patch of perfectly shiny car paint over the dent, making it disappear. Similarly, objects such as the dirty laundry on your bedroom floor could be hidden by overlaying a texture of the clean floor on top of them.

And once these real-world objects are (apparently) gone, the system can generate a new representation of them — manipulated in size, shape, color etc. — and display it on top of the (hidden) object to encode data.

In case you want to declare me completely crazy now, watch this short demo by Laan Labs, running on an unmodified iPhone using the new ARKit:

Laan Labs: ARKit iPhone Levitation take 2

And this is just the current state of this trick. Imagine what it will look like in ten years’ time.

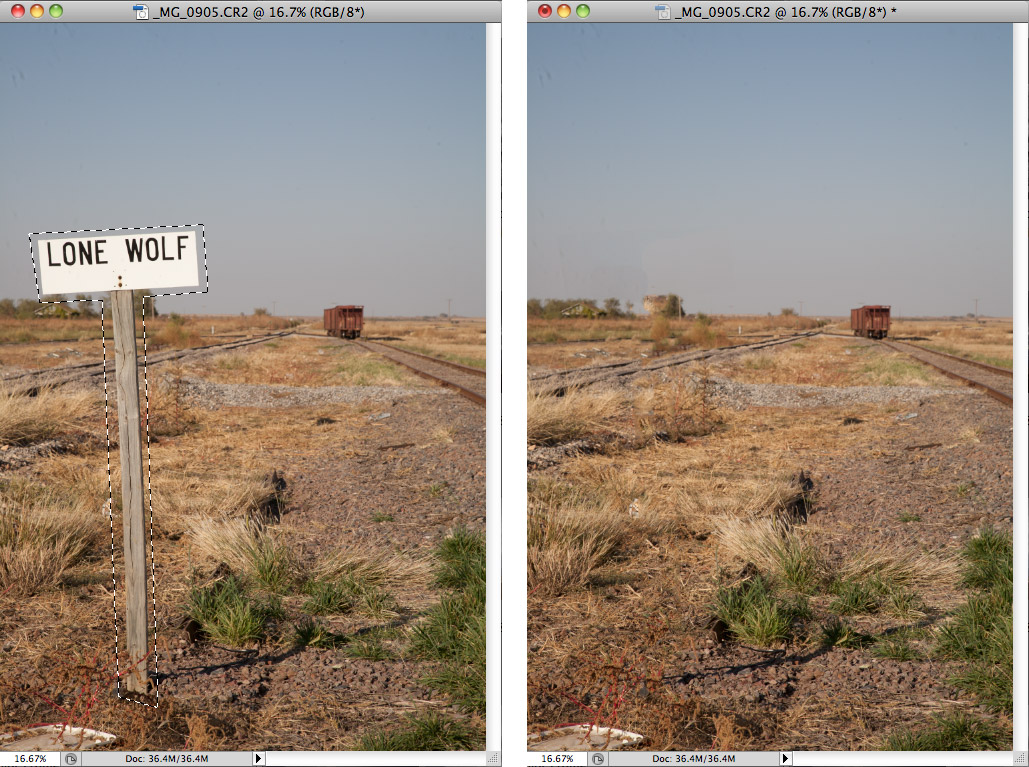

This reality distortion requires object recognition as well as the ability to fill in occluded (and thus invisible) backgrounds, both things that today’s image manipulation software can already do. Think Photoshop’s Content-Aware Fill on your nose:
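Photoshop’s Content-Aware Fill is proprietary and far more sophisticated than anything that fits here. As a rough illustration of “filling in the hidden background”, here is a minimal sketch of a much simpler, swapped-in technique, diffusion inpainting: every pixel covered by the object to erase is repeatedly replaced by the average of its neighbours, so the surrounding background bleeds into the hole. The grayscale nested lists, the function name, and the iteration count are all assumptions.

```python
from typing import List

Image = List[List[float]]
Mask = List[List[bool]]  # True = pixel belongs to the object we want to erase


def diffusion_inpaint(image: Image, mask: Mask, iterations: int = 200) -> Image:
    """Fill masked pixels by repeatedly averaging their 4-neighbours.

    Unmasked pixels are never changed, so known background values
    gradually diffuse into the hole left by the removed object.
    """
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    for _ in range(iterations):
        nxt = [row[:] for row in out]
        for y in range(h):
            for x in range(w):
                if not mask[y][x]:
                    continue
                neighbours = [
                    out[ny][nx]
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                    if 0 <= ny < h and 0 <= nx < w
                ]
                nxt[y][x] = sum(neighbours) / len(neighbours)
        out = nxt
    return out


if __name__ == "__main__":
    # A uniform floor (value 100) with a dark sock (value 0) in the middle.
    floor = [[100.0, 100.0, 100.0], [100.0, 0.0, 100.0], [100.0, 100.0, 100.0]]
    sock = [[False, False, False], [False, True, False], [False, False, False]]
    print(diffusion_inpaint(floor, sock)[1][1])  # 100.0
```

Diffusion only produces smooth fills, so it works for plain floors but would blur patterned backgrounds; patch-based methods like Content-Aware Fill copy texture from elsewhere in the image instead.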

Once we have such a system capable of recognizing physical objects and manipulating our visual perception of them, we can create data visualizations without garish overlays or virtual objects: the actual world becomes our building material to map information to.

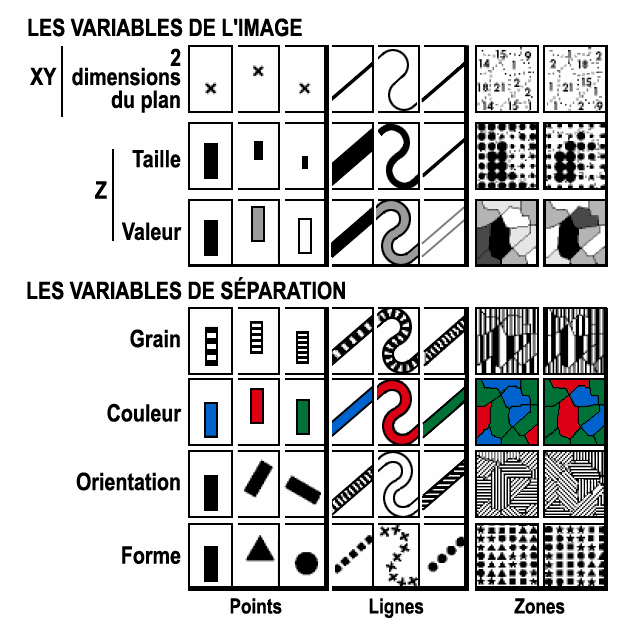

When we look at the basics of data visualization, there are only a handful of so-called visual variables that let us encode information. They’re all based on our visual perception and on which attributes of objects we can distinguish, such as position, size, brightness, area, color, etc.

Jacques Bertin: Visual Variables from ‘Sémiologie graphique’ (1967)

The concept of visual variables also makes it possible to break down every type of chart into these basic building blocks: a scatterplot encodes data as horizontal and vertical position. Encoding an additional attribute of each item as size turns it into a bubble chart, and so on.

At its most basic, data visualization is just the mapping of numbers from the data onto these visual variables.
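That mapping can be sketched in a few lines. The helpers below are hypothetical (loosely modelled on the idea of scales in charting libraries): `linear_scale` maps a data domain onto a visual range such as pixel position, and `area_scale` maps a value onto a bubble radius. Together they turn the scatterplot-to-bubble-chart step into code. The records are made-up illustration data.

```python
import math
from typing import Callable, Tuple


def linear_scale(domain: Tuple[float, float], range_: Tuple[float, float]) -> Callable[[float], float]:
    """Map a data value from `domain` linearly onto a visual-variable `range_`."""
    (d0, d1), (r0, r1) = domain, range_
    if d0 == d1:
        raise ValueError("domain must span a non-zero interval")
    return lambda v: r0 + (v - d0) / (d1 - d0) * (r1 - r0)


def area_scale(max_value: float, max_radius: float) -> Callable[[float], float]:
    """Map a value to a circle radius so that the circle's *area* is proportional to it."""
    return lambda v: max_radius * math.sqrt(v / max_value)


if __name__ == "__main__":
    # Made-up records: (income per capita, life expectancy, population in millions)
    records = [(1_000, 55, 10), (20_000, 75, 40), (50_000, 82, 160)]
    x = linear_scale((0, 50_000), (0, 800))  # horizontal position in pixels
    y = linear_scale((50, 85), (600, 0))     # vertical position; screen y grows downward
    r = area_scale(160, 40)                  # bubble radius in pixels

    for income, life, pop in records:
        print(f"x={x(income):.0f} y={y(life):.0f} r={r(pop):.1f}")
```

Position uses a plain linear mapping, while size goes through a square root: readers tend to judge bubbles by their area, so making the radius proportional to the value would exaggerate large values.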

With the idea of visual variables and a powerful reality distortion AR, we can create data visualizations from everyday objects:

Color + Brightness + Texture

It’s ok, I’m sure it’s just sleeping.

These are the obvious ones: just recolor real-world objects to encode data. The plant on your windowsill that you’ve ignored for days might already be showing hints of brown, but AR datavis can really emphasize its sorry state, hopefully spurring you into action.

To improve its chances, the AR could also enhance its brightness, making it stand out more among all the other objects by your window.

Finally, AR could also change its texture, making it look more stripy or spiky, whatever might grab your attention, maybe even displaying snake-like animations slowly flowing along the plant as a last resort.

All these visual manipulations do not require getting rid of the actual object by hiding it behind some simulated background. It’s enough to create an overlay in the right size and shape on top of the actual object. The data we visualize (e.g., the dryness of the plant’s soil) determines the amount of color, brightness or texture.

To make the most of a data visualization, you’d probably also want to compare multiple objects — maybe see which of your plants actually needs water the most — something which should work well even with just digitally changing their color, brightness or texture.
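Here is a minimal sketch of how soil dryness could drive an overlay’s color and brightness, assuming a hypothetical sensor reading normalized so that 0 means soaked and 1 means bone dry. The two endpoint colors, the 50% maximum brightness boost, and all names are illustrative assumptions; texture is left out. Using the same fixed domain for every plant is what keeps the overlays comparable.

```python
from typing import Dict, Tuple

RGB = Tuple[int, int, int]

HEALTHY_GREEN: RGB = (60, 160, 60)  # assumed overlay colour for "well watered"
DRY_BROWN: RGB = (140, 90, 40)      # assumed overlay colour for "bone dry"


def lerp_color(a: RGB, b: RGB, t: float) -> RGB:
    """Linearly interpolate between two RGB colours; t is clamped to [0, 1]."""
    t = max(0.0, min(1.0, t))
    return tuple(round(ca + (cb - ca) * t) for ca, cb in zip(a, b))


def plant_overlay(dryness: float, min_dry: float = 0.0, max_dry: float = 1.0) -> Tuple[RGB, float]:
    """Overlay colour and brightness multiplier for one plant.

    Every plant shares the same (min_dry, max_dry) domain, so the thirstiest
    plant always gets the brownest and brightest overlay.
    """
    t = max(0.0, min(1.0, (dryness - min_dry) / (max_dry - min_dry)))
    colour = lerp_color(HEALTHY_GREEN, DRY_BROWN, t)
    brightness = 1.0 + 0.5 * t  # up to 50% brighter to draw attention
    return colour, brightness


if __name__ == "__main__":
    # Hypothetical readings for three plants.
    readings: Dict[str, float] = {"fern": 0.2, "basil": 0.9, "cactus": 0.5}
    # List the thirstiest plant first.
    for name, d in sorted(readings.items(), key=lambda kv: kv[1], reverse=True):
        colour, brightness = plant_overlay(d)
        print(name, colour, f"brightness x{brightness:.2f}")
```

Normalizing against the observed minimum and maximum instead of a fixed domain would exaggerate differences, but it would also paint the least-dry plant green even if every plant were parched, which defeats the purpose.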

Size + Orientation + Shape

These visual variables are where we’re moving from subtle augmentations to more drastic ones. By hiding the actual objects and distorting a virtual representation of them, AR can modify an object’s size, orientation or shape for data visualization.

Visualizing data in a supermarket doesn’t have to happen with a heat map as in the example above. The objects themselves could also be visually distorted to reflect data.

Maybe the neat rows of products themselves become bar charts, with the height of each stack of butter distorted to encode a value.

They could also shrink in size to represent how far they had to travel to get there, borrowing a perspective metaphor (and making selecting local products much easier).

Beyond that, they could also rotate slightly or even change their shape to encode information, be it nutritional or about their price.
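A minimal sketch of the three supermarket distortions, under loudly stated assumptions: the helper names are hypothetical, the 30 cm maximum stack height, the 500 km reference distance, and the 10° maximum tilt are arbitrary illustration values, and the product data is made up. The travel-distance shrink borrows the perspective idea that apparent size falls off roughly with 1/distance, offset so that local products stay at full size.

```python
def stack_height(value: float, max_value: float, max_height_cm: float = 30.0) -> float:
    """Bar-chart height for a stack of butter: proportional to the value."""
    return max_height_cm * value / max_value


def travel_shrink(distance_km: float, reference_km: float = 500.0) -> float:
    """Scale factor mimicking perspective: the farther a product travelled, the smaller it looks.

    Falls off roughly as 1 / distance for long trips; products with a
    distance near 0 (local ones) keep a scale of about 1.
    """
    return reference_km / (reference_km + distance_km)


def tilt_degrees(value: float, max_value: float, max_tilt: float = 10.0) -> float:
    """Slight rotation encoding a value, capped so products stay easy to grab."""
    return max_tilt * max(0.0, min(1.0, value / max_value))


if __name__ == "__main__":
    # Made-up products: (name, origin distance in km, sugar in g per 100 g)
    products = [("local butter", 40, 0.6), ("imported butter", 2_000, 0.8)]
    for name, km, sugar in products:
        print(name, f"scale={travel_shrink(km):.2f}", f"tilt={tilt_degrees(sugar, 1.0):.1f} deg")
```

The tilt is deliberately capped at a small angle: a product rotated too far would be hard to pick up, which is exactly the kind of interaction cost these distortions introduce.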

Position

The most powerful and easiest-to-read visual variable, position, is the one that should probably be used most sparingly in AR datavis.

All of the above reality distortions already make interacting with those (actual, physical) objects somewhat harder. It becomes difficult to take a product off the supermarket shelf if its size or orientation has been manipulated.

But what’s arguably worse is having these products floating around in space, or moving unpredictably across the shelf. What helps with readability in a datavis chart might not necessarily help with interacting with the real world.

Combine that with an additional array of the virtual realistic objects from above, and we’re deep in confusion country.

Reality Hacking

What all of these ideas have in common is that they’re messing with the basics of our perception. Things we’ve learned since our earliest days (that most of what we see is real, that objects can be grabbed and interacted with) no longer apply when half of the objects are virtually generated and the other half is thoroughly distorted.

These extended Augmented Reality techniques are reality hacking. Our own version of reality becomes not only machine-readable but also writable, with all the consequences that entails. This should lead designers and technologists to tread very, very carefully and ask themselves the right questions before every decision.

Being the technology optimist that I am, I hope that early reflections such as this one can push us and our technology towards a good outcome. The more we reflect now on the implications of this technology, the fewer mistakes we’ll make once AR glasses with perfect object recognition and manipulation are on everyone’s noses.

So that our AR future may look more like this:

than this:

I’m deeply grateful to Alice Thudt for her brilliant suggestions and comments on the article.